
Introduction

Large Language Models (LLMs) hardly need an introduction, given how widely the industry has adopted them. Most enterprises have either adopted an LLM or are planning to adopt one to build Generative AI-based enterprise applications that support a variety of business use cases.

While there are many closed-source options available, such as OpenAI's GPT-3.5 and GPT-4 or Google's Gemini, open-source LLMs have started gaining traction because of their transparency, customizability, and cost-effectiveness. In this article, we will explore the top 5 open-source LLMs for enterprises, based on information gathered from a variety of sources.

Open Source LLMs

#1 – Llama 2

Llama 2 has led the open-source LLM field since Meta launched it in July 2023. It offers transparency, flexibility, and cost-effectiveness for enterprise tasks with lower request volumes. Llama 2 allows its models to be fine-tuned and adapted for specific tasks, making it a compelling option for enterprises. Key highlights include:

- Llama 2 is free for research and commercial use.

- Llama 2 is now available on Amazon Bedrock, Google Cloud’s Vertex AI, and Microsoft Azure.

- Model sizes range from lightweight to more comprehensive, with 7B, 13B, and 70B parameters.

- The chat variants are fine-tuned for chat use cases with over 100K supervised fine-tuning examples.
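Because the chat variants are fine-tuned on a specific prompt layout, prompts sent to a self-hosted Llama-2-chat model generally need to follow that layout. The sketch below assumes the `[INST]` / `<<SYS>>` template used in Meta's Llama 2 reference code; the helper `build_llama2_prompt` is hypothetical, and managed endpoints on Bedrock, Vertex AI, or Azure may handle formatting differently, so check each provider's documentation.

```python
# Minimal sketch of the Llama 2 chat prompt layout ([INST] and <<SYS>> markers).
# build_llama2_prompt is a hypothetical helper, not part of any library.
from __future__ import annotations

from typing import List, Optional, Tuple

B_INST, E_INST = "[INST]", "[/INST]"
B_SYS, E_SYS = "<<SYS>>\n", "\n<</SYS>>\n\n"


def build_llama2_prompt(
    system_prompt: str, turns: List[Tuple[str, Optional[str]]]
) -> str:
    """Format a conversation for a Llama-2-chat model.

    `turns` is a list of (user_message, assistant_reply) pairs; the reply in
    the final pair is None when the model should generate it.
    """
    prompt = ""
    for i, (user, reply) in enumerate(turns):
        # The system prompt is folded into the first user message only.
        if i == 0 and system_prompt:
            user = f"{B_SYS}{system_prompt}{E_SYS}{user}"
        prompt += f"<s>{B_INST} {user.strip()} {E_INST}"
        if reply is not None:
            # Completed turns are closed with the end-of-sequence marker.
            prompt += f" {reply.strip()} </s>"
    return prompt


print(build_llama2_prompt("You are a helpful assistant.", [("What is Llama 2?", None)]))
```

Keeping the template in one function makes it easy to swap in a different layout if you later move to a model fine-tuned on another chat format.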

Meta launched Purple Llama in December 2023 for safe and responsible AI development. It is an umbrella project featuring open trust and safety tools and evaluations to help developers build responsibly with AI models.

The Large Model Systems Organization (LMSYS Org) is an open research organization founded by UC Berkeley, UCSD, and CMU. It has published Vicuna, a fine-tuned version of Llama, and its response quality has been impressive.

#2 – Falcon

The Technology Innovation Institute (TII) has been pushing the boundaries of open-source LLMs with its Falcon models: Falcon 40B launched in March 2023, followed by Falcon 180B in September 2023. The models are available on Hugging Face, and an introductory session on Falcon was held at AWS re:Invent 2023.

Key highlights:

- Falcon model options: 180B, 40B, 7.5B, and 1.3B parameters.

- Falcon 180B was trained on 3.5 trillion tokens, using roughly 4 times the compute of Meta's Llama 2.

- The Falcon 180B license allows developers to integrate the model into their applications and services and to host it on their own or leased infrastructure. Note, however, that the license restricts monetizing the model as a hosting provider.

- It is available on Amazon SageMaker JumpStart and Microsoft Azure AI.
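The "4 times the compute" comparison can be sanity-checked with a common rule of thumb: training a dense transformer with N parameters on D tokens costs roughly 6 × N × D floating-point operations. This approximation is an assumption here (not necessarily how TII derived its figure), and the Llama 2 side assumes the 70B model and its 2 trillion pretraining tokens:

$$
C \approx 6ND
$$

$$
C_{\text{Falcon 180B}} \approx 6 \times (180 \times 10^{9}) \times (3.5 \times 10^{12}) \approx 3.78 \times 10^{24}\ \text{FLOPs}
$$

$$
C_{\text{Llama 2 70B}} \approx 6 \times (70 \times 10^{9}) \times (2 \times 10^{12}) = 8.4 \times 10^{23}\ \text{FLOPs}
$$

$$
\frac{C_{\text{Falcon 180B}}}{C_{\text{Llama 2 70B}}} \approx \frac{3.78 \times 10^{24}}{8.4 \times 10^{23}} = 4.5
$$

A ratio of about 4.5 is consistent with the roughly fourfold difference quoted above.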

#3 – BLOOM

BLOOM was developed by the BigScience initiative, a global collaborative effort involving researchers from 70+ countries. It is one of the largest multilingual LLMs, and the model is available on Hugging Face. Key highlights are:

- BLOOM is an autoregressive LLM trained to continue text from a prompt, using vast amounts of text data covering 46 natural languages and 13 programming languages.

- Model sizes: 560M, 1.1B, 1.7B, 3B, 7.1B, and 176B parameters.

- It can be deployed using Amazon SageMaker and the Microsoft Azure AI Model Catalog.

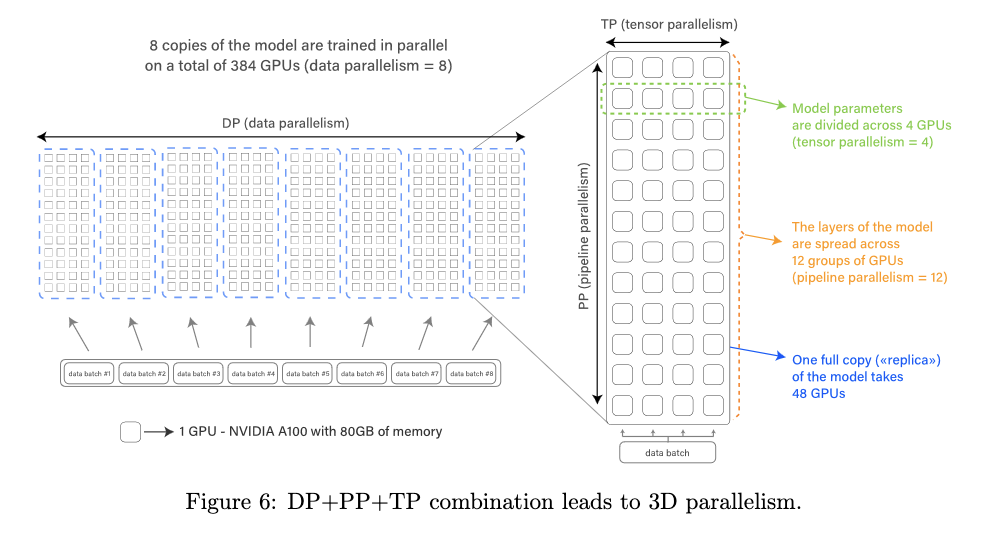

- The BLOOM team has published a research paper describing the model. BLOOM was trained using Megatron-DeepSpeed (Smith et al., 2022), a framework for large-scale distributed training.

- BLOOM's HELM benchmark results are included in the published paper.
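"Autoregressive" means the model generates one token at a time, each conditioned on the prompt plus everything it has generated so far. The sketch below illustrates that loop with greedy decoding; the bigram table `BIGRAMS` and the function `toy_next_token_scores` are hypothetical stand-ins for a real model such as BLOOM, which would score its whole vocabulary with a neural network.

```python
# Minimal sketch of autoregressive (left-to-right) greedy decoding.
# BIGRAMS and toy_next_token_scores are hypothetical stand-ins for a trained model.
from typing import Callable, Dict, List


def greedy_decode(
    prompt: List[str],
    next_token_scores: Callable[[List[str]], Dict[str, float]],
    max_new_tokens: int = 10,
    eos: str = "</s>",
) -> List[str]:
    """Repeatedly append the highest-scoring next token until EOS or the limit."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        scores = next_token_scores(tokens)    # condition on the full context so far
        best = max(scores, key=scores.get)    # greedy: take the top-scoring token
        if best == eos:
            break
        tokens.append(best)
    return tokens


# Toy "model": next-token scores keyed by the previous token only.
BIGRAMS = {
    "open": {"source": 0.9, "door": 0.1},
    "source": {"models": 0.7, "code": 0.3},
    "models": {"</s>": 1.0},
}


def toy_next_token_scores(tokens: List[str]) -> Dict[str, float]:
    # A real LLM attends to the whole context; this toy looks at the last token.
    return BIGRAMS.get(tokens[-1], {"</s>": 1.0})


print(greedy_decode(["open"], toy_next_token_scores))
```

Production systems often replace the greedy `max` with sampling (temperature, top-k, or top-p), but the token-by-token loop stays the same.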

#4 – MPT-30B and MPT-7B

MosaicML (an open-source startup, since acquired by Databricks) launched MPT-7B in May 2023, followed by MPT-30B in June 2023. Both models are available on Hugging Face. Note that Databricks also released Dolly 2.0, an LLM trained on the Databricks ML platform, in April 2023.

Key highlights are:

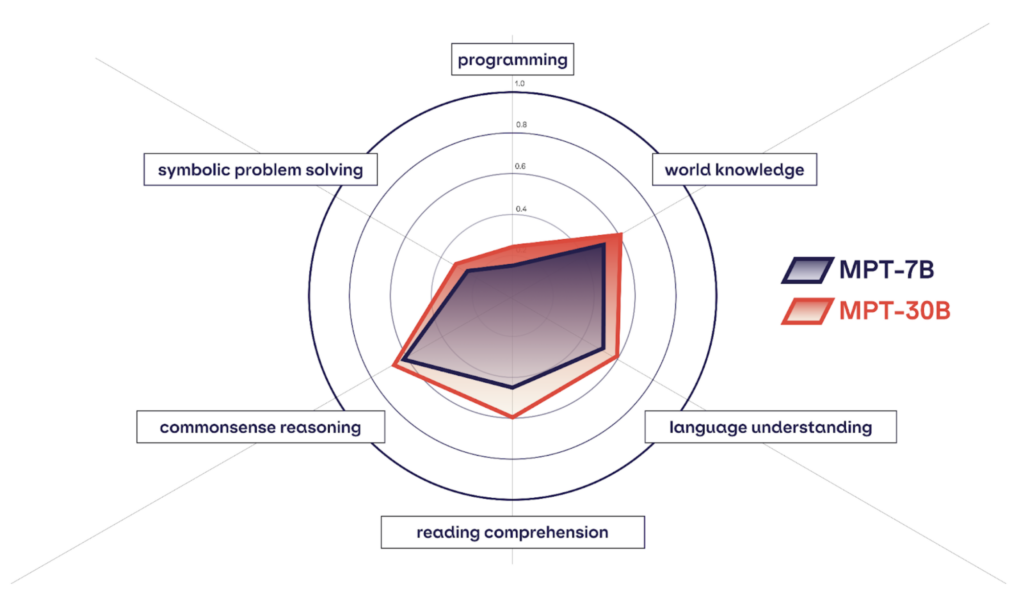

- According to MosaicML, MPT-30B outperforms GPT-3 on the smaller set of evaluation metrics available from the original GPT-3 paper. Here is a comparative view of MPT-7B and MPT-30B across different criteria:

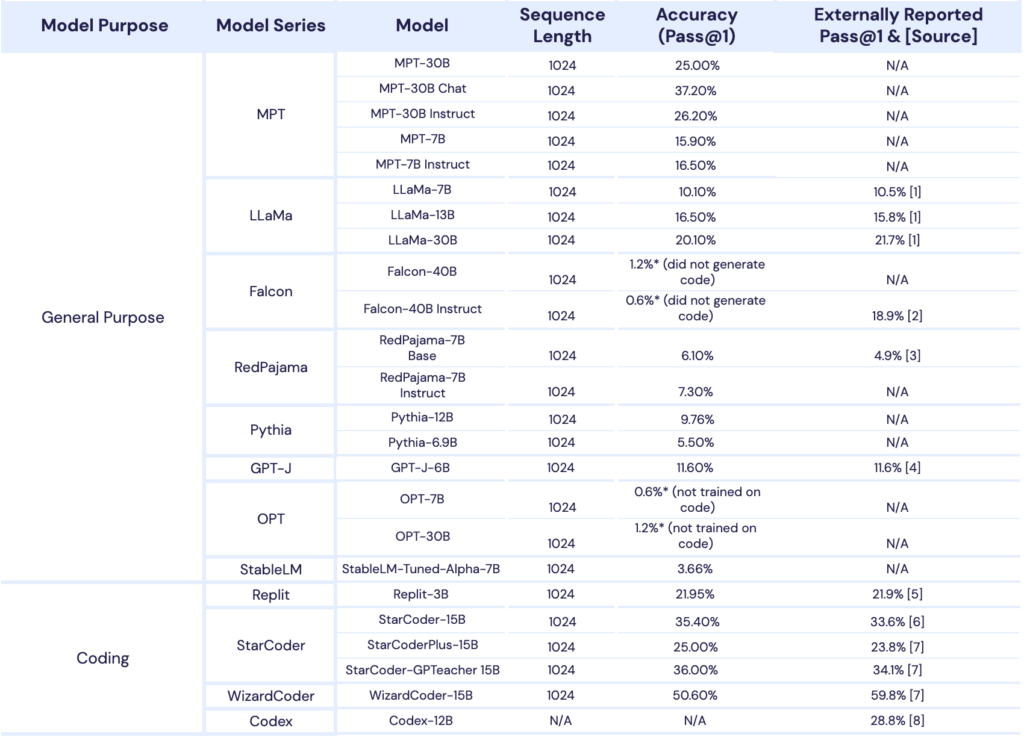

- Here is another comparative view of MPT vs. other open-source models:
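One practical criterion when choosing between MPT-7B and MPT-30B is the hardware needed to host them. As a rough sketch, the memory needed just to hold the weights is the parameter count times the bytes per parameter at a given precision. The helper `weight_memory_gb` is hypothetical, the parameter counts are nominal, and serving also needs memory for the KV cache, activations, and framework overhead.

```python
# Back-of-the-envelope estimate of memory needed to hold model weights only.
# Assumptions: nominal parameter counts; excludes KV cache, activations, overhead.
# weight_memory_gb is a hypothetical helper for illustration.

BYTES_PER_PARAM = {"fp32": 4, "fp16/bf16": 2, "int8": 1, "int4": 0.5}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Approximate weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


for name, params in [("MPT-7B", 7e9), ("MPT-30B", 30e9)]:
    row = ", ".join(
        f"{dtype}: {weight_memory_gb(params, dtype):.1f} GB" for dtype in BYTES_PER_PARAM
    )
    print(f"{name} -> {row}")
```

The same function applies to any model in this list, which makes it a quick first filter before checking the instance types offered by a cloud provider.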

#5 – Mistral 7B

Mistral AI launched Mistral 7B in September 2023 under the Apache 2.0 license. Key highlights are:

- Mistral AI offers a chat completions API with the `mistral-tiny`, `mistral-small`, and `mistral-medium` models, and an embeddings API with `mistral-embed`.

- It is available on Google Cloud Vertex AI, Azure AI Studio, and Amazon SageMaker JumpStart.

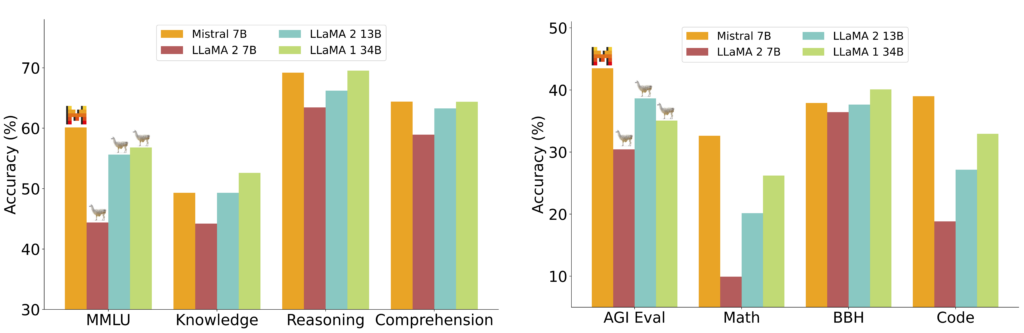

- According to Mistral AI, Mistral 7B outperforms Llama 2 13B on all benchmarks and Llama 1 34B on many benchmarks.
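To show what calling Mistral AI's hosted chat completions and embeddings APIs involves, the sketch below builds (but does not send) the HTTP requests using only the Python standard library. The base URL, paths, payload fields, and response shape are assumptions based on Mistral's public API documentation at the time of writing; the helper `build_request` and the `MISTRAL_API_KEY` environment variable name are hypothetical choices for this example.

```python
# Sketch: build (but do not send) requests for Mistral AI's chat and embeddings APIs.
# Assumptions: URL, paths, payload fields and model names follow Mistral's public docs
# at the time of writing; build_request and MISTRAL_API_KEY are hypothetical names.
import json
import os
import urllib.request

API_BASE = "https://api.mistral.ai/v1"  # assumed base URL


def build_request(path: str, payload: dict, api_key: str) -> urllib.request.Request:
    """Create a JSON POST request with bearer-token authentication."""
    return urllib.request.Request(
        url=f"{API_BASE}{path}",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Accept": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


api_key = os.environ.get("MISTRAL_API_KEY", "YOUR_API_KEY")

chat_req = build_request(
    "/chat/completions",
    {
        "model": "mistral-tiny",
        "messages": [{"role": "user", "content": "Summarize Mistral 7B in one sentence."}],
    },
    api_key,
)
embed_req = build_request(
    "/embeddings",
    {"model": "mistral-embed", "input": ["Open-source LLMs for enterprises"]},
    api_key,
)

# Sending requires a valid key and network access (response shape assumed):
# with urllib.request.urlopen(chat_req) as resp:
#     print(json.load(resp)["choices"][0]["message"]["content"])
```

Building the request separately from sending it keeps the payload easy to inspect and test; the same pattern applies if you later switch to an official client library.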

Beyond the models listed above, several other open-source LLMs are showing promising results in performance, truthfulness, precision, and other criteria.

Hugging Face maintains an Open LLM Leaderboard to track open LLMs and chatbots.

Conclusion

Open-source LLMs now offer many strong alternatives, with options to host them on cloud providers such as AWS, GCP, or Azure. Choosing the appropriate LLM is not driven by just a few parameters; many diverse aspects come into play, shaped by enterprise architecture practices and business use cases. That said, the emergence of open-source alternatives is a positive development for enterprises, particularly for avoiding vendor lock-in, gaining security and control, and improving transparency. As open-source models mature, their adoption will grow, and we should keep our options open by experimenting with open-source LLMs.


Disclaimer:

All data and information provided on this blog are for informational purposes only. The author makes no representations as to the accuracy, completeness, correctness, suitability, or validity of any information on this site, and will not be liable for any errors, omissions, or delays in this information or for any losses, injuries, or damages arising from its display or use. All information is provided on an as-is basis. Some parts of this blog were generated by artificial intelligence. This is a personal opinion. The opinions expressed here are my own and not those of my employer or any other organization.